Machine Learning in AI Interview Questions

Table of Contents

- What is machine learning

- Machine learning model

- Supervised, Unsupervised, and Reinforcement learning

- Explain the concept of overfitting and underfitting in machine learning. How do you mitigate them?

- What are ensemble methods in machine learning, and how do bagging and boosting differ?

- Explain how a decision tree algorithm works and when you would use it.

- Describe how k-nearest neighbors (KNN) works and its key limitations.

- What is a recurrent neural network (RNN), and how is it applied to time-series data?

- How do you deal with imbalanced datasets in a classification problem?

- What are the differences between precision, recall, F1-score, and ROC-AUC, and when would you use each?

- How do you deploy machine learning models in production, and what are the challenges?

If you’re preparing for a Machine Learning in AI interview, you’re likely to face questions that test both your theoretical knowledge and practical skills. Interviewers will dive into topics like supervised and unsupervised learning, neural networks, decision trees, and key machine learning concepts. They’ll also want to know how proficient you are in languages such as Python, R, or Java, which are widely used in machine learning. Expect scenario-based questions that challenge your ability to solve real-world problems using these tools.

In this guide, I’ve gathered some of the most important Machine Learning in AI interview questions to help you ace your interview and stand out. Whether you’re a beginner or have years of experience, this content will equip you with the knowledge and confidence to handle tough interview scenarios. With machine learning experts earning an average salary between $110,000 and $150,000 annually, this is a high-demand field where preparation truly pays off. Let’s dive in and get you ready to land that job!

Curious about AI and how it can transform your career? Join our free demo at CRS Info Solutions and connect with our expert instructors to learn more about our AI online course. We emphasize real-time project-based learning, daily notes, and interview questions to ensure you gain practical experience. Enroll today for your free demo and embark on your path to becoming an AI professional!

1. What is machine learning, and how does it differ from traditional programming?

Machine learning is a branch of artificial intelligence that allows systems to learn from data and improve their performance without being explicitly programmed. In traditional programming, we write a set of predefined rules and instructions for the computer to follow. However, in machine learning, the model learns patterns from data and makes decisions or predictions based on that data. Instead of hard-coding every rule, we let the machine discover those rules through training.

The main difference is that traditional programming relies on explicit, human-defined rules, while machine learning infers those rules from examples. A traditional system behaves exactly as it was coded. A machine learning model’s behavior is determined by its training data, and it can be retrained as new data arrives to improve its predictions or classifications over time.

Here’s a simple implementation of a machine learning model using Python and the scikit-learn library:

```python
from sklearn.linear_model import LinearRegression
import numpy as np

# Sample data: years of experience and corresponding salaries
X = np.array([[1], [2], [3], [4], [5]])  # Independent variable
y = np.array([30000, 40000, 50000, 60000, 70000])  # Dependent variable

# Create and train the model
model = LinearRegression()
model.fit(X, y)

# Make a prediction for a new data point
new_experience = np.array([[6]])
predicted_salary = model.predict(new_experience)
print(f"Predicted salary for 6 years of experience: ${predicted_salary[0]:,.2f}")
```

2. Can you explain the difference between supervised, unsupervised, and reinforcement learning?

Supervised learning involves training a model on a labeled dataset, meaning that the input data comes with corresponding output labels. The goal is for the model to learn a mapping from inputs to outputs so it can predict labels for unseen data. For example, a supervised learning model might be trained to classify images as either “cat” or “dog,” using a labeled dataset of images. Common supervised algorithms include linear regression, logistic regression, decision trees, and neural networks.

On the other hand, unsupervised learning deals with unlabeled data. The model is tasked with finding hidden patterns or intrinsic structures in the data. A classic example is clustering, where the model groups similar items without knowing what the correct groups are. Reinforcement learning, unlike the other two, focuses on an agent interacting with an environment. The agent learns through trial and error, receiving rewards or penalties for its actions, and its objective is to maximize cumulative rewards over time. This is commonly seen in game-playing AI or robotics.
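To make the contrast concrete, here is a minimal sketch on scikit-learn’s built-in iris dataset (chosen purely for illustration). The supervised model is given the labels `y`, while k-means clustering only sees the features and has to find groups on its own. Reinforcement learning needs an environment to interact with, so it is not shown here.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.cluster import KMeans

X, y = load_iris(return_X_y=True)

# Supervised: the model is trained on the features AND the labels
clf = LogisticRegression(max_iter=1000)
clf.fit(X, y)
supervised_pred = clf.predict(X[:5])  # predicted species labels

# Unsupervised: k-means sees only the features, never the labels
kmeans = KMeans(n_clusters=3, n_init=10, random_state=42)
cluster_ids = kmeans.fit_predict(X)  # arbitrary cluster ids 0..2

print("Supervised predictions:", supervised_pred)
print("Cluster assignments:", cluster_ids[:5])
```

Note that the cluster ids are arbitrary numbers, not species names: the algorithm found the groups but has no idea what they are called.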

3. What is a machine learning model, and how do you choose the right one for a given problem?

A machine learning model is a mathematical representation or function that is trained to recognize patterns in data. It learns from historical data and can make predictions or decisions when presented with new, unseen data. Strictly speaking, the algorithm (for example, linear regression) is the training procedure, and the model is its fitted result: the learned parameters that map new inputs to predictions. Choosing the right model depends on various factors such as the type of problem (classification, regression, etc.), the amount of available data, and the computational resources you have.

For instance, if you are working on a classification problem with structured data, you might start with algorithms like logistic regression, SVM, or decision trees. On the other hand, if your task involves recognizing images, convolutional neural networks (CNNs) would be more suitable. You also need to consider how interpretable the model needs to be. Simpler models like linear regression might be easier to interpret but less powerful, while complex models like deep neural networks can handle larger datasets and nonlinear relationships but may be harder to understand.
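In practice, model choice is often empirical: I shortlist a few reasonable candidates and compare them with cross-validation. Here is a minimal sketch, assuming scikit-learn and using its built-in breast cancer dataset purely as a stand-in for structured data. The candidate list and settings are illustrative choices, not a recommendation.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.linear_model import LogisticRegression
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

# Candidate models; scale-sensitive ones are wrapped with a StandardScaler
candidates = {
    "logistic_regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "svm": make_pipeline(StandardScaler(), SVC()),
    "decision_tree": DecisionTreeClassifier(max_depth=4, random_state=42),
}

results = {}
for name, model in candidates.items():
    # 5-fold cross-validated accuracy for each candidate
    scores = cross_val_score(model, X, y, cv=5, scoring="accuracy")
    results[name] = scores.mean()
    print(f"{name}: mean accuracy {scores.mean():.3f} (+/- {scores.std():.3f})")

best = max(results, key=results.get)
print("Best candidate:", best)
```

The score is only one input. If two candidates are close, I would usually prefer the simpler, more interpretable, or cheaper-to-serve one.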

4. Describe the steps involved in the machine learning pipeline.

The machine learning pipeline is a step-by-step process for developing machine learning models. The first step is data collection, where you gather the raw data required for your problem. Next comes data preprocessing, which involves cleaning the data, handling missing values, and transforming it into a format suitable for the model. This step might also include feature engineering, where I would extract or create new features from the raw data to enhance model performance.

After preprocessing, I would split the dataset into training and test sets, often with a separate validation set as well. The model is trained on the training set. Next comes hyperparameter tuning, where I search for good values of the hyperparameters (settings such as tree depth or learning rate that are not learned from the data). I use the validation set or cross-validation on the training data for this, so that the test set stays untouched. Finally, the tuned model is evaluated once on the test set using metrics suited to the problem: accuracy, precision, or F1 score for classification, or RMSE for regression. Once satisfied, I deploy the model into production, where it makes predictions on real-world data. The deployed model then needs continuous monitoring and periodic retraining, because its performance can degrade as real-world data changes.
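These steps map fairly directly onto scikit-learn’s `Pipeline` and `GridSearchCV`. Here is a minimal sketch that uses the built-in wine dataset as stand-in raw data. Preprocessing and the model live in one pipeline so they are fit only on training data. Tuning uses cross-validation inside the training set, and the test set is used once at the end. The grid values are arbitrary examples.

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import train_test_split, GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.impute import SimpleImputer
from sklearn.preprocessing import StandardScaler
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score

# 1. Data collection (stand-in: a built-in dataset)
X, y = load_wine(return_X_y=True)

# 2. Hold out a test set before any fitting
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

# 3. Preprocessing + model in one pipeline
pipe = Pipeline([
    ("impute", SimpleImputer(strategy="median")),  # fills missing values, if any
    ("scale", StandardScaler()),
    ("model", LogisticRegression(max_iter=1000)),
])

# 4. Hyperparameter tuning with 5-fold CV on the training set only
search = GridSearchCV(pipe, {"model__C": [0.01, 0.1, 1, 10]}, cv=5, scoring="f1_macro")
search.fit(X_train, y_train)

# 5. Final evaluation on the untouched test set
test_f1 = f1_score(y_test, search.predict(X_test), average="macro")
print("Best params:", search.best_params_)
print(f"Test macro F1: {test_f1:.3f}")
```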

5. How do you evaluate the accuracy of a machine learning model?

Evaluating the accuracy of a machine learning model depends on the type of problem you are solving. For classification problems, I would typically start with accuracy, the fraction of correctly predicted instances. However, accuracy alone isn’t always enough, especially with imbalanced datasets, where a model that always predicts the majority class can still score highly. In such cases, metrics like precision, recall, and F1 score become important. Precision tells me what fraction of my positive predictions were actually positive. Recall measures what fraction of the actual positive instances I identified. The F1 score is the harmonic mean of precision and recall, so it is high only when both are high.

For regression tasks, I would use metrics such as mean squared error (MSE), root mean squared error (RMSE), or R-squared to assess the model’s performance. The goal here is to minimize the error between predicted and actual values. Cross-validation is another crucial step. I split the data into multiple subsets, train the model on some, test it on the others, and rotate through all of them. This gives a more reliable estimate of how well the model generalizes to unseen data than a single split does, and it makes overfitting easier to detect.
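A small worked example makes the regression metrics concrete. The true and predicted values below are made up for illustration. The errors are −0.5, 0, 0.5, and 1.0, so the squared errors sum to 1.5 and the MSE is 1.5 / 4 = 0.375. The targets have mean 6 and a total sum of squares of 20, so R² = 1 − 1.5 / 20 = 0.925.

```python
import numpy as np
from sklearn.metrics import mean_squared_error, r2_score

y_true = np.array([3.0, 5.0, 7.0, 9.0])
y_pred = np.array([2.5, 5.0, 7.5, 10.0])

mse = mean_squared_error(y_true, y_pred)  # mean of squared errors
rmse = np.sqrt(mse)                       # back in the target's units
r2 = r2_score(y_true, y_pred)             # 1 - SS_res / SS_tot

print(f"MSE={mse:.3f}, RMSE={rmse:.3f}, R^2={r2:.3f}")  # MSE=0.375, RMSE=0.612, R^2=0.925
```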

6. What is the difference between classification and regression? Provide examples.

Classification and regression are both types of supervised learning problems, but they solve different tasks. In classification, the goal is to predict discrete labels or categories. For example, I might build a model to classify emails as “spam” or “not spam,” or to categorize customer reviews as positive or negative. Some common algorithms for classification include logistic regression, decision trees, and random forests.

On the other hand, regression predicts continuous values. A classic example is predicting housing prices based on features such as square footage, location, and number of bedrooms. The output is a real number rather than a category. Algorithms commonly used for regression tasks include linear regression, support vector regression, and decision tree regression. The key difference lies in the nature of the predicted value: discrete for classification and continuous for regression.
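The difference shows up directly in the output type. In this minimal sketch the square-footage values, size labels, and prices are all made up for illustration. The same feature feeds a classifier, which returns a category, and a regressor, which returns a number.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier, DecisionTreeRegressor

# Toy feature: square footage (illustrative values)
X = np.array([[800], [1000], [1500], [2000], [2500], [3000]])

# Classification target: a discrete label
labels = np.array(["small", "small", "medium", "medium", "large", "large"])
# Regression target: a continuous price
prices = np.array([150_000, 180_000, 250_000, 310_000, 390_000, 450_000])

clf = DecisionTreeClassifier(random_state=0).fit(X, labels)
reg = DecisionTreeRegressor(random_state=0).fit(X, prices)

print(clf.predict([[2200]]))  # a category label
print(reg.predict([[2200]]))  # a real number
```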

7. Explain the concept of overfitting and underfitting in machine learning. How do you mitigate them?

Overfitting occurs when a machine learning model learns too well from the training data, capturing not only the general patterns but also the noise and random fluctuations. As a result, the model performs extremely well on the training data but poorly on unseen data, as it fails to generalize. On the other hand, underfitting happens when the model is too simple to capture the underlying patterns in the data. In this case, it performs poorly on both training and test datasets.

To detect overfitting, I compare training and validation (or cross-validation) scores; a large gap is the warning sign. To mitigate it, I would use regularization (L1 or L2), reduce model complexity (for example, by pruning decision trees or limiting the number of features), gather more training data, or apply early stopping when training iteratively. For underfitting, I can try a more complex model, add more informative features, or reduce regularization. It’s all about finding the balance where the model captures the real patterns without memorizing the noise.
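One quick way to see both failure modes is to vary a decision tree’s depth and compare training and test accuracy. Here is a minimal sketch on synthetic data. The `flip_y=0.1` setting randomly flips about 10% of the labels so that there is noise to memorize, and the exact scores depend on the data.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data with label noise
X, y = make_classification(n_samples=1000, n_features=20, n_informative=5,
                           flip_y=0.1, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

scores = {}
for depth in [1, 4, None]:  # too simple, moderate, unlimited
    model = DecisionTreeClassifier(max_depth=depth, random_state=42).fit(X_train, y_train)
    scores[depth] = (model.score(X_train, y_train), model.score(X_test, y_test))
    print(f"max_depth={depth}: train={scores[depth][0]:.2f}, test={scores[depth][1]:.2f}")
```

A depth-1 stump scores poorly on both sets (underfitting). The unlimited tree reaches perfect training accuracy but a clearly lower test score (overfitting).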

8. What is cross-validation, and why is it important in model evaluation?

Cross-validation is a technique used to evaluate the generalization performance of a machine learning model. Instead of relying on a single train-test split, I divide the dataset into multiple subsets, or “folds.” For example, in k-fold cross-validation, the data is split into k roughly equal parts. I train the model on k-1 folds and test it on the remaining fold, repeating this process k times. The final performance is the average across all k folds.

This approach ensures that every data point is used for testing exactly once, so the performance estimate depends less on one lucky or unlucky split. Cross-validation does not prevent overfitting by itself. It does give a more robust estimate of how well my model will perform on unseen data and reveals whether it is generalizing beyond the training set.
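The k-fold procedure is short enough to write out by hand. This sketch uses scikit-learn’s `KFold` on the iris data and then checks the result against `cross_val_score`. Shuffling matters here because the iris rows are sorted by class.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import KFold, cross_val_score
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)

# Manual k-fold loop: train on k-1 folds, test on the held-out fold
kf = KFold(n_splits=5, shuffle=True, random_state=42)
fold_scores = []
for train_idx, test_idx in kf.split(X):
    model.fit(X[train_idx], y[train_idx])
    fold_scores.append(model.score(X[test_idx], y[test_idx]))

print("Per-fold accuracy:", np.round(fold_scores, 3))
print(f"Mean accuracy: {np.mean(fold_scores):.3f}")

# The same procedure in one call (same splits, so the same scores)
auto_scores = cross_val_score(model, X, y, cv=kf)
```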

9. How do you handle missing or corrupted data in a dataset?

Handling missing or corrupted data is crucial because poor-quality data can significantly affect the performance of a machine learning model. The first step I take is to analyze the extent and pattern of the missing data. If the missing values are minimal and random, I might choose to remove rows with missing values. However, this isn’t always ideal, especially if a large portion of data is affected.

Another common approach is imputation, where I replace missing values with the mean, median, or mode of the column. In some cases, I may use more advanced techniques like k-nearest neighbors (KNN) imputation or even build a model to predict the missing values. The method I choose depends on the data and the context, but the goal is to maintain data integrity while minimizing bias.
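Both kinds of imputation are available in scikit-learn. This minimal sketch uses a made-up toy array with `np.nan` marking missing entries. Median imputation fills each gap with its column’s median. KNN imputation fills it with the average of that feature over the most similar rows, where similarity is measured on the features both rows have.

```python
import numpy as np
from sklearn.impute import SimpleImputer, KNNImputer

# Toy data with missing entries marked as np.nan
X = np.array([
    [1.0, 2.0, 1.0],
    [2.0, np.nan, 2.0],
    [3.0, 6.0, 3.0],
    [np.nan, 8.0, 4.0],
])

# Replace each missing value with its column median
X_median = SimpleImputer(strategy="median").fit_transform(X)

# Replace each missing value with the mean of that feature over the 2 nearest rows
X_knn = KNNImputer(n_neighbors=2).fit_transform(X)

print(X_median)
print(X_knn)
```

Here row 1’s missing value becomes 6.0 (the column median) under median imputation. Under KNN imputation it becomes 4.0, the average of rows 0 and 2, which are its two nearest neighbors.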

10. What is feature engineering, and why is it important in building machine learning models?

Feature engineering is the process of creating new input features from the raw data that will improve the model’s performance. It’s a critical step in machine learning because better input features often lead to better model accuracy. Through feature engineering, I might transform existing features (e.g., taking the logarithm of skewed data), combine features, or extract useful information from complex data types, like dates or text.

For example, when working with a dataset that includes a timestamp, I could extract features like day of the week, hour, or season to help the model understand temporal patterns. Effective feature engineering can significantly improve model performance, sometimes more than choosing a more complex algorithm. It’s often said that good features make good models.
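This minimal pandas sketch covers both the timestamp example and the log transform. The data is hypothetical, and the month-to-season mapping assumes Northern Hemisphere meteorological seasons.

```python
import numpy as np
import pandas as pd

# Hypothetical raw data: an order timestamp and a right-skewed amount column
df = pd.DataFrame({
    "timestamp": pd.to_datetime([
        "2024-01-15 08:30", "2024-04-20 14:00", "2024-07-04 19:45", "2024-10-31 23:10",
    ]),
    "amount": [12.0, 150.0, 3_000.0, 45.0],
})

# Temporal features extracted from the timestamp
df["day_of_week"] = df["timestamp"].dt.dayofweek  # Monday=0 ... Sunday=6
df["hour"] = df["timestamp"].dt.hour
df["is_weekend"] = (df["day_of_week"] >= 5).astype(int)

# Month -> season (Northern Hemisphere assumption)
season_map = {12: "winter", 1: "winter", 2: "winter", 3: "spring", 4: "spring", 5: "spring",
              6: "summer", 7: "summer", 8: "summer", 9: "autumn", 10: "autumn", 11: "autumn"}
df["season"] = df["timestamp"].dt.month.map(season_map)

# Log transform to compress the skewed amount (log1p handles zeros safely)
df["log_amount"] = np.log1p(df["amount"])

print(df.drop(columns="timestamp"))
```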

11. Can you explain the bias-variance tradeoff in machine learning?

The bias-variance tradeoff is a fundamental concept in machine learning that describes two competing sources of error in a model’s predictions on unseen data. Bias refers to the error introduced by overly simplistic assumptions in the model, leading to underfitting. Variance refers to the error introduced by the model being too sensitive to fluctuations in the particular training data it saw, resulting in overfitting.

The goal is to find the right balance between bias and variance. If a model is too simple, it will have high bias and low variance, meaning it will perform poorly on both training and test data. If it’s too complex, it will have low bias but high variance, leading to excellent performance on training data but poor generalization on new data. Techniques like cross-validation, regularization, and reducing model complexity help strike the right balance.
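For squared-error loss, the tradeoff can be written exactly. Assume data generated as $y = f(x) + \varepsilon$, where the noise $\varepsilon$ has mean zero and variance $\sigma^2$, and let $\hat{f}$ be a model fit on a randomly drawn training set. The expected test error at a point $x$, taken over training sets and noise, then decomposes as:

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

Simple models tend to inflate the first term and flexible models the second. The $\sigma^2$ term sets a floor that no model can go below.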

12. What are ensemble methods in machine learning, and how do bagging and boosting differ?

Ensemble methods combine the predictions of multiple models to get better overall performance than any single one of them. Boosting in particular builds on the idea that many weak learners (models only slightly better than chance) can be combined into a strong learner. Two of the most common ensemble approaches are bagging and boosting. They work differently and mainly target different sources of error.

Bagging (Bootstrap Aggregating) trains multiple models independently on bootstrap samples of the data (random samples drawn with replacement). It then averages their predictions, or takes a majority vote for classification. Random Forest is a well-known bagging-based algorithm. Boosting, on the other hand, trains models sequentially: each new model focuses on the errors made by the ensemble so far, gradually improving performance. AdaBoost and Gradient Boosting are common examples. Bagging mainly reduces variance by averaging many high-variance models, while boosting mainly reduces bias by repeatedly correcting the remaining errors.
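Both approaches are available in scikit-learn. In this minimal comparison on synthetic data, the ensemble sizes and tree depths are illustrative choices, and the scores will vary with the data.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.ensemble import BaggingClassifier, GradientBoostingClassifier
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=500, n_features=20, n_informative=8, random_state=0)

# Baseline: a single unpruned tree (low bias, high variance)
single_tree = DecisionTreeClassifier(random_state=0)

# Bagging: 50 full-depth trees, each trained independently on a bootstrap sample;
# their votes are combined, which mainly reduces variance
bagging = BaggingClassifier(DecisionTreeClassifier(), n_estimators=50, random_state=0)

# Boosting: 100 shallow trees trained sequentially, each fit to the remaining
# errors (loss gradients) of the ensemble so far, which mainly reduces bias
boosting = GradientBoostingClassifier(n_estimators=100, max_depth=2,
                                      learning_rate=0.1, random_state=0)

scores = {}
for name, model in [("single tree", single_tree), ("bagging", bagging), ("boosting", boosting)]:
    scores[name] = cross_val_score(model, X, y, cv=5).mean()
    print(f"{name}: {scores[name]:.3f}")
```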

13. Explain how a decision tree algorithm works and when you would use it.

A decision tree is a supervised learning algorithm used for both classification and regression tasks. It works by recursively splitting the dataset into subsets. At each node, it chooses the feature and threshold that best separate the target values, typically the split that most reduces Gini impurity or entropy for classification, or mean squared error for regression. Each internal node represents a test on a feature, and the branches represent the outcomes of that test. Splitting continues until a stopping condition is met (for example, a node is pure or a maximum depth is reached), and each leaf node holds the final prediction.

I would use decision trees when I need a model that is easy to interpret and doesn’t require extensive data preprocessing. For example, decision trees are well-suited for classification problems where I need to explain why certain decisions were made. However, decision trees can easily overfit, especially when the tree becomes too deep, so I often prune them or use ensemble methods like Random Forests to improve performance.

Here’s a simple decision tree implementation:

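```python
import matplotlib.pyplot as plt
from sklearn import tree
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Load a sample dataset and split it into training and test sets
iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.3, random_state=42
)

# Create and train a decision tree classifier (max_depth limits tree growth to curb overfitting)
dt_model = DecisionTreeClassifier(max_depth=3, random_state=42)
dt_model.fit(X_train, y_train)

# Visualize the decision tree
plt.figure(figsize=(10, 6))
tree.plot_tree(dt_model, filled=True, feature_names=iris.feature_names,
               class_names=list(iris.target_names))
plt.title("Decision Tree Visualization")
plt.show()

# Make predictions and evaluate accuracy on the test set
predictions = dt_model.predict(X_test)
accuracy = dt_model.score(X_test, y_test)
print(f"Decision Tree accuracy: {accuracy:.2f}")
```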
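To show what “best split” means, here is a hand-written sketch of one split on a single feature using Gini impurity, the default criterion in scikit-learn’s `DecisionTreeClassifier`. The helpers `gini` and `best_split` are hypothetical and written only for illustration. This is not scikit-learn’s actual implementation, which also searches across all features and recurses.

```python
import numpy as np

def gini(labels):
    """Gini impurity: 1 - sum(p_k^2) over the class proportions p_k."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(1.0 - np.sum(p ** 2))

def best_split(x, y):
    """Try midpoints between sorted unique values of one feature and return
    the threshold with the lowest size-weighted impurity of the two children."""
    values = np.unique(x)
    best_threshold, best_impurity = None, np.inf
    for t in (values[:-1] + values[1:]) / 2:
        left, right = y[x <= t], y[x > t]
        impurity = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
        if impurity < best_impurity:
            best_threshold, best_impurity = float(t), float(impurity)
    return best_threshold, best_impurity

# Toy feature and labels: class 0 for small x, class 1 for large x
x = np.array([1.0, 2.0, 3.0, 10.0, 11.0, 12.0])
y = np.array([0, 0, 0, 1, 1, 1])
print(gini(y))           # 0.5: the root mixes two balanced classes
print(best_split(x, y))  # (6.5, 0.0): this threshold separates the classes perfectly
```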

14. What is a random forest, and how does it improve upon decision trees?

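A Random Forest is an ensemble learning method that combines multiple decision trees to improve accuracy and reduce overfitting. Each tree is trained on a bootstrap sample of the data (drawn with replacement) and considers only a random subset of features at each split, which makes the trees less correlated with one another. The final prediction averages the trees’ predictions (for regression) or takes a majority vote (for classification). Averaging many decorrelated trees reduces variance, which is why a random forest typically overfits much less than a single deep tree.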

Here’s a simple example of using Random Forest for classification:

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

# Load the iris dataset
iris = load_iris()
X = iris.data
y = iris.target

# Split data
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Create and train a Random Forest classifier
rf_model = RandomForestClassifier(n_estimators=100)
rf_model.fit(X_train, y_train)

# Evaluate the model
accuracy = rf_model.score(X_test, y_test)
print(f"Random Forest accuracy: {accuracy:.2f}")
```

15. Describe how k-nearest neighbors (KNN) works and its key limitations.

KNN is a simple, instance-based learning algorithm that classifies new instances based on the majority class among the k-nearest neighbors in the training set. It calculates the distance (commonly Euclidean) between points to find the nearest neighbors.

Here’s a code snippet to demonstrate KNN:

```python
from sklearn.neighbors import KNeighborsClassifier
from sklearn.datasets import load_iris

# Load the iris dataset
iris = load_iris()
X = iris.data
y = iris.target

# Create a KNN classifier
knn_model = KNeighborsClassifier(n_neighbors=3)

# Fit the model
knn_model.fit(X, y)

# Make a prediction
predicted = knn_model.predict([[5.0, 3.6, 1.4, 0.2]])
print(f"Predicted class: {predicted[0]}")
```

Key Limitations of KNN:

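- Computational Cost: KNN has almost no training step, but prediction can be slow on large datasets because it computes distances from each query to all training samples (unless an index such as a KD-tree is used).

- Curse of Dimensionality: In high-dimensional spaces, distances between points become less informative, so performance degrades.

- Sensitivity to Noise: KNN is sensitive to noisy data and outliers, especially with a small k.

- Feature Scaling: Because it relies on distances, features with large numeric ranges dominate unless the data is standardized.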
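The algorithm itself fits in a few lines of NumPy, which also makes the computational-cost limitation visible: every prediction computes a distance to every training point. In this sketch, `knn_predict` is a hypothetical helper and the six training points are made up. scikit-learn’s `KNeighborsClassifier` adds tree-based indexes, distance weighting, and other options.

```python
import numpy as np
from collections import Counter

def knn_predict(X_train, y_train, x_new, k=3):
    """Classify one point by majority vote among its k nearest training points."""
    # Euclidean distance from x_new to every training sample (O(n) per query)
    distances = np.linalg.norm(X_train - x_new, axis=1)
    # Indices of the k smallest distances
    nearest = np.argsort(distances)[:k]
    # Majority vote over the neighbors' labels
    return Counter(y_train[nearest]).most_common(1)[0][0]

X_train = np.array([[1.0, 1.0], [1.5, 2.0], [2.0, 1.0],
                    [8.0, 8.0], [8.5, 9.0], [9.0, 8.0]])
y_train = np.array([0, 0, 0, 1, 1, 1])

print(knn_predict(X_train, y_train, np.array([2.0, 2.0])))  # near the first group
print(knn_predict(X_train, y_train, np.array([7.5, 8.5])))  # near the second group
```

With real data I would standardize the features first (for example, with `StandardScaler`) so that no single feature dominates the distance.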

16. What are support vector machines (SVM), and how do they classify data?

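Support Vector Machines (SVM) are supervised learning models used for classification and regression. For classification, they find the hyperplane that separates data points of different classes with the maximum margin, that is, the largest distance to the nearest points of each class. Those nearest data points are called support vectors, and they alone determine the hyperplane. When the classes are not linearly separable, a kernel function (such as the RBF kernel) lets the SVM find a linear separator in a higher-dimensional feature space without computing that space explicitly.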

Here’s a simple implementation of SVM for classification:

```python
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Load dataset
iris = datasets.load_iris()
X = iris.data
y = iris.target

# Split data
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Create and train the SVM model
svm_model = SVC(kernel='linear')
svm_model.fit(X_train, y_train)

# Evaluate the model
accuracy = svm_model.score(X_test, y_test)
print(f"SVM accuracy: {accuracy:.2f}")
```
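The kernel matters when a straight line can’t separate the classes. This minimal sketch uses scikit-learn’s synthetic “two moons” data, where the noise level and `C=1.0` are illustrative choices. It compares a linear kernel with an RBF kernel and reports how many support vectors each model keeps.

```python
from sklearn.datasets import make_moons
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Two interleaving half-moons: not separable by a straight line
X, y = make_moons(n_samples=400, noise=0.15, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

scores = {}
for kernel in ["linear", "rbf"]:
    model = SVC(kernel=kernel, C=1.0).fit(X_train, y_train)
    scores[kernel] = model.score(X_test, y_test)
    # support_vectors_ holds the training points that define the margin
    print(f"{kernel}: accuracy={scores[kernel]:.2f}, "
          f"support vectors={len(model.support_vectors_)}")
```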

17. How does gradient descent optimization work in training machine learning models?

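Gradient Descent is an optimization algorithm used to minimize the loss function in machine learning models by iteratively adjusting the model parameters. At each step, it computes the gradient of the loss function with respect to the parameters and moves the parameters a small step in the opposite direction, since that is the direction in which the loss decreases fastest. The step size is set by the learning rate. If it is too large, the updates can overshoot and diverge; if it is too small, training converges slowly.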

Here’s a simple illustration of gradient descent in linear regression:

```python
import numpy as np

# Sample data (1-D arrays): the true relationship is y = x
X = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([1.0, 2.0, 3.0, 4.0])

# Parameters
m = 0.0  # slope
b = 0.0  # intercept
learning_rate = 0.01
n_iterations = 5000

# Gradient descent on the mean squared error loss
for _ in range(n_iterations):
    y_pred = m * X + b
    error = y_pred - y
    # Partial derivatives of MSE = mean(error**2) with respect to m and b
    m_gradient = (2 / len(X)) * np.dot(X, error)
    b_gradient = (2 / len(X)) * np.sum(error)
    # Step in the opposite direction of the gradient
    m -= learning_rate * m_gradient
    b -= learning_rate * b_gradient

print(f"Learned parameters: m={m:.4f}, b={b:.4f}")
```

18. What is a neural network, and how does it relate to deep learning?

A Neural Network is a computational model inspired by the way biological neural networks work. It consists of layers of interconnected nodes (neurons), where each connection has a weight that is adjusted during training. Deep Learning is a subset of machine learning that uses neural networks with many layers (deep architectures) to model complex patterns in large datasets.
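Each dense layer computes a weighted sum of its inputs plus a bias and then applies an activation function. This minimal NumPy sketch runs a forward pass through a network shaped like the Keras model below: 10 inputs, 32 hidden ReLU units, and 1 sigmoid output. The weights are random and untrained, chosen purely for illustration. Training would adjust `W1`, `b1`, `W2`, and `b2` by backpropagation and gradient descent.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Randomly initialized (untrained) weights: 10 inputs -> 32 hidden -> 1 output
W1, b1 = rng.normal(scale=0.1, size=(10, 32)), np.zeros(32)
W2, b2 = rng.normal(scale=0.1, size=(32, 1)), np.zeros(1)

def forward(X):
    hidden = relu(X @ W1 + b1)        # hidden layer: weighted sum + ReLU
    return sigmoid(hidden @ W2 + b2)  # output layer: probability in (0, 1)

X = rng.normal(size=(4, 10))  # a batch of 4 samples with 10 features each
probs = forward(X)
print(probs.shape)  # (4, 1)
```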

Here’s a basic example of a neural network using Keras:

```python
from keras.models import Sequential
from keras.layers import Dense

# Create a simple neural network for binary classification
model = Sequential()
model.add(Dense(32, activation='relu', input_shape=(10,)))  # Hidden layer; input_shape declares 10 input features
model.add(Dense(1, activation='sigmoid'))  # Output layer: probability of the positive class

# Compile the model
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

# Assume X_train and y_train are defined
# model.fit(X_train, y_train, epochs=10, batch_size=32)
```

19. How does a convolutional neural network (CNN) work, and where is it used?

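Convolutional Neural Networks (CNNs) are specialized neural networks designed for processing grid-like data, such as images. Their convolutional layers slide small learned filters across the input and compute a weighted sum at each position. This produces feature maps that respond to local patterns such as edges, and deeper layers combine those patterns into more complex shapes. Pooling layers then downsample the feature maps, reducing their spatial size and computation while making the features less sensitive to small shifts.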
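To see what a single filter and a pooling step do, here is a plain NumPy sketch on a tiny made-up image. The helpers `conv2d_valid` and `max_pool2x2` are hypothetical. Like deep-learning libraries, the convolution here is technically cross-correlation (the kernel is not flipped), and in a real CNN the filter values would be learned rather than hand-set.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the
    element-wise products at each position."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2x2(x):
    """2x2 max pooling with stride 2 (assumes even height and width)."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# 6x6 image: dark left half (0), bright right half (1)
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-set vertical-edge filter: responds where intensity rises left-to-right
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

features = conv2d_valid(image, kernel)  # 4x4 feature map, each row [0, 3, 3, 0]
pooled = max_pool2x2(features)          # 2x2 map, all 3s
print(features)
print(pooled)
```

The feature map is non-zero only around the boundary between the dark and bright halves, which is exactly what an edge detector should report.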

Here’s a basic structure of a CNN using Keras:

```python
from keras.models import Sequential
from keras.layers import Conv2D, MaxPooling2D, Flatten, Dense

# Create a simple CNN for binary classification of 64x64 RGB images
model = Sequential()
model.add(Conv2D(32, (3, 3), activation='relu', input_shape=(64, 64, 3)))  # Convolutional layer: 32 filters of size 3x3
model.add(MaxPooling2D(pool_size=(2, 2)))  # Pooling layer: halves height and width
model.add(Flatten())  # Flatten the feature maps into a vector
model.add(Dense(128, activation='relu'))  # Fully connected layer
model.add(Dense(1, activation='sigmoid'))  # Output layer

# Compile the model
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

# Assume X_train and y_train are defined
# model.fit(X_train, y_train, epochs=10, batch_size=32)
```

Use Cases: CNNs are widely used in image classification, object detection, image segmentation, and video analysis.

20. What is a recurrent neural network (RNN), and how is it applied to time-series data?

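Recurrent Neural Networks (RNNs) are a class of neural networks designed for sequential data, where each element depends on the ones before it. They maintain a hidden state that is updated at each time step and carries information from earlier steps forward. For time-series forecasting, a common setup is to feed the network a window of past observations, for example the last 30 values, and train it to predict the next value. Simple RNNs struggle with long-range dependencies because of vanishing gradients, which is why LSTM and GRU layers are often used instead.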
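Before a series can go into a recurrent layer, it has to be cut into input windows and next-step targets. Keras recurrent layers expect inputs shaped `(samples, timesteps, features)`. The helper `make_windows` is hypothetical, and the series is a stand-in ramp.

```python
import numpy as np

def make_windows(series, timesteps):
    """Turn a 1-D series into (samples, timesteps, 1) inputs and next-step targets."""
    X, y = [], []
    for start in range(len(series) - timesteps):
        X.append(series[start:start + timesteps])
        y.append(series[start + timesteps])
    X = np.array(X)[..., np.newaxis]  # add a feature axis: 1 feature per step
    return X, np.array(y)

series = np.arange(10, dtype=float)  # stand-in for a real time series
X, y = make_windows(series, timesteps=3)
print(X.shape, y.shape)    # (7, 3, 1) (7,)
print(X[0].ravel(), y[0])  # [0. 1. 2.] 3.0
```

For a real forecasting task, I would split the windows chronologically rather than randomly, so the model is never trained on the future.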

Here’s a simple RNN example using Keras:

```python
from keras.models import Sequential
from keras.layers import SimpleRNN, Dense

# Input shape: windows of `timesteps` steps, each with `features` values
timesteps = 30  # example value: use the last 30 observations
features = 1    # example value: a univariate series

# Create a simple RNN
model = Sequential()
model.add(SimpleRNN(50, input_shape=(timesteps, features)))  # 50 recurrent units
model.add(Dense(1))  # Output layer: the predicted next value

# Compile the model
model.compile(optimizer='adam', loss='mean_squared_error')

# Assume X_train has shape (samples, timesteps, features) and y_train has shape (samples,)
# model.fit(X_train, y_train, epochs=10, batch_size=32)
```

21. Explain the concept of hyperparameter tuning and why it is critical in model performance.

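Hyperparameter tuning involves searching for the hyperparameter values that give a model its best performance. Hyperparameters are settings that are not learned from the data; they are set before training (e.g., the learning rate, the number of trees in a random forest, or the maximum tree depth). Tuning is critical because the same algorithm can underfit or overfit badly depending on these settings.

A common method for hyperparameter tuning is Grid Search, which evaluates every combination in a parameter grid using cross-validation: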

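```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

# Load data and hold out a test set
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Define the model
rf_model = RandomForestClassifier(random_state=42)

# Define the parameter grid
param_grid = {
    'n_estimators': [10, 50, 100],
    'max_depth': [None, 10, 20]
}

# Grid search: 9 combinations x 5 folds = 45 fits, plus a final refit of the best one
grid_search = GridSearchCV(estimator=rf_model, param_grid=param_grid, scoring='accuracy', cv=5)
grid_search.fit(X_train, y_train)

print(f"Best parameters: {grid_search.best_params_}")
print(f"Test accuracy of best model: {grid_search.score(X_test, y_test):.2f}")
```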

22. How do you deal with imbalanced datasets in a classification problem?

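To handle imbalanced datasets, you can resample the training data (oversampling the minority class, for example with SMOTE, or undersampling the majority class). You can also use class weights so that errors on the minority class cost more, or adjust the decision threshold. It also helps to evaluate with metrics such as precision, recall, F1-score, or precision-recall AUC rather than accuracy, which can look high even when the minority class is ignored. Resampling should be applied only to the training set, never to the test set.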
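Class weighting is often the simplest option because it needs no extra library. In this minimal sketch on synthetic data with about 5% positives, `class_weight="balanced"` in scikit-learn weights each class by `n_samples / (n_classes * class_count)`. The data and split are illustrative.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import recall_score

# Synthetic data where only ~5% of samples belong to the positive class
X, y = make_classification(n_samples=2000, weights=[0.95, 0.05], random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=42
)

plain = LogisticRegression(max_iter=1000).fit(X_train, y_train)
# Minority-class errors cost more in the loss, pushing the boundary toward the majority
weighted = LogisticRegression(max_iter=1000, class_weight="balanced").fit(X_train, y_train)

recalls = {}
for name, model in [("plain", plain), ("balanced", weighted)]:
    recalls[name] = recall_score(y_test, model.predict(X_test))
    print(f"{name}: minority-class recall = {recalls[name]:.2f}")
```

The usual trade-off is that higher minority recall comes with lower precision, so I would check both.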

Here’s an example using the SMOTE technique for oversampling:

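```python
from collections import Counter

from imblearn.over_sampling import SMOTE  # from the imbalanced-learn package
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split

# Example imbalanced data: roughly 90% class 0, 10% class 1
X, y = make_classification(n_samples=1000, weights=[0.9, 0.1], random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, stratify=y, random_state=42)

# Print class distribution before resampling
print(f"Original class distribution: {Counter(y_train)}")

# Apply SMOTE to the training set only; it creates synthetic minority samples
# by interpolating between minority samples and their nearest minority neighbors
smote = SMOTE(random_state=42)
X_resampled, y_resampled = smote.fit_resample(X_train, y_train)

# Print new class distribution
print(f"Resampled class distribution: {Counter(y_resampled)}")
```

23. What is dimensionality reduction, and how do techniques like PCA and t-SNE work?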

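Dimensionality reduction is the process of reducing the number of features in a dataset while preserving as much of its essential information as possible. PCA (Principal Component Analysis) is a linear method. It finds orthogonal directions (principal components) along which the data varies most, which are the eigenvectors of the data’s covariance matrix, and projects the data onto the top few of them. t-SNE (t-distributed Stochastic Neighbor Embedding) is a nonlinear method. It converts pairwise distances into neighbor probabilities and searches for a low-dimensional layout in which points that were close in the original space stay close.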

Here’s how you can implement PCA:

```python
from sklearn.decomposition import PCA
from sklearn.datasets import load_iris
import matplotlib.pyplot as plt

# Load the dataset
iris = load_iris()
X = iris.data

# Apply PCA
pca = PCA(n_components=2)
X_reduced = pca.fit_transform(X)

# Plot the reduced dataset
plt.scatter(X_reduced[:, 0], X_reduced[:, 1], c=iris.target)
plt.xlabel("Principal Component 1")
plt.ylabel("Principal Component 2")
plt.title("PCA of Iris Dataset")
plt.show()
```

t-SNE is mainly used to visualize high-dimensional data in two or three dimensions. It preserves local neighborhoods well but not global distances, it is computationally expensive, and unlike PCA it does not learn a transform that can be applied to new data.
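To see what PCA does internally, the steps can be reproduced with NumPy: center the data, compute the covariance matrix, take its top eigenvectors, and project onto them. This is a sketch for understanding only. scikit-learn’s `PCA` computes the same result with its own solvers, so the projections can differ only by the sign of each component.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

X = load_iris().data

# 1. Center each feature at zero
X_centered = X - X.mean(axis=0)
# 2. Covariance matrix of the features (4x4)
cov = np.cov(X_centered, rowvar=False)
# 3. Eigenvectors = principal directions, eigenvalues = variance along them
eigvals, eigvecs = np.linalg.eigh(cov)
order = np.argsort(eigvals)[::-1]  # sort by variance, largest first
components = eigvecs[:, order[:2]]
# 4. Project onto the top-2 directions
X_manual = X_centered @ components

explained_ratio = eigvals[order[:2]] / eigvals.sum()
sk_pca = PCA(n_components=2).fit(X)
print("Manual explained variance ratio:", np.round(explained_ratio, 3))
print("sklearn explained variance ratio:", np.round(sk_pca.explained_variance_ratio_, 3))
```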

24. What are the differences between precision, recall, F1-score, and ROC-AUC, and when would you use each?

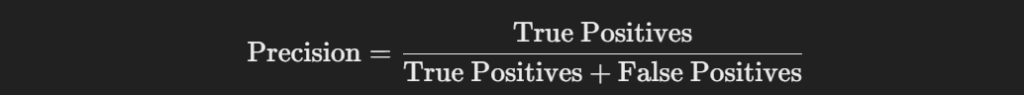

- Precision measures the accuracy of positive predictions:

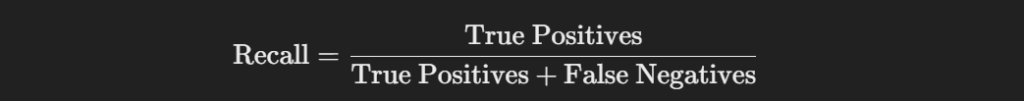

- Recall (Sensitivity) measures the ability to find all positive instances:

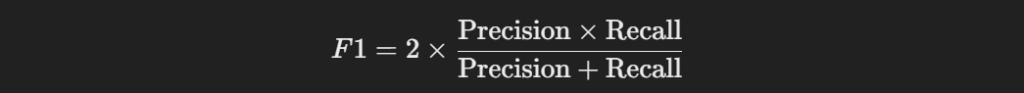

- F1-Score is the harmonic mean of precision and recall, providing a balance between the two:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

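- ROC-AUC (Receiver Operating Characteristic, Area Under the Curve): the ROC curve plots the true positive rate against the false positive rate as the classification threshold varies. The AUC summarizes that curve as a single number between 0 and 1, where 0.5 corresponds to random ranking and 1.0 to perfect separation.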

You can use these metrics as follows:

- Use Precision when false positives are costly (e.g., spam detection).

- Use Recall when false negatives are critical (e.g., disease detection).

- Use F1-Score when you need a balance between precision and recall.

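- Use ROC-AUC to compare models independently of any single threshold. On heavily imbalanced data, precision-recall AUC is often more informative, because ROC-AUC can look high even when precision on the minority class is poor.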
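Here is a small worked example with made-up labels and scores for 8 samples, thresholded at 0.5. It yields TP = 3, FP = 1, and FN = 1, so precision = recall = F1 = 0.75. For ROC-AUC, 14 of the 16 positive-negative pairs are ranked correctly, giving 0.875. Note that ROC-AUC uses the raw scores, while the other three metrics use the thresholded predictions.

```python
from sklearn.metrics import precision_score, recall_score, f1_score, roc_auc_score

# Made-up labels and model scores for 8 samples (1 = positive class)
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_score = [0.9, 0.8, 0.6, 0.3, 0.7, 0.2, 0.1, 0.05]  # predicted probabilities
y_pred = [1 if s >= 0.5 else 0 for s in y_score]     # threshold at 0.5

precision = precision_score(y_true, y_pred)  # TP / (TP + FP) = 3 / 4
recall = recall_score(y_true, y_pred)        # TP / (TP + FN) = 3 / 4
f1 = f1_score(y_true, y_pred)                # harmonic mean of the two
auc = roc_auc_score(y_true, y_score)         # fraction of correctly ranked pos/neg pairs

print(precision, recall, f1, auc)
```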

25. How do you deploy machine learning models in production, and what are the challenges?

Deploying machine learning models involves several steps, including:

- Model Serialization: Saving the trained model with Python’s pickle or joblib, or exporting it to a portable format such as ONNX.

- Serving the Model: Using frameworks like Flask, FastAPI, or TensorFlow Serving to create an API endpoint.

- Monitoring: Implementing logging and monitoring tools to track model performance in real-time.

- Scaling: Packaging the service in containers (e.g., Docker) and orchestrating them with Kubernetes so it can handle varying loads.

Challenges:

- Versioning: Managing different model versions.

- Data Drift: Detecting changes in the distribution of incoming data and retraining the model when they degrade its performance.

- Latency: Ensuring quick response times for predictions.

- Integration: Seamlessly integrating the model with existing systems and workflows.
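For the data drift challenge, one simple check is to compare a feature’s training distribution with recent production values using a two-sample Kolmogorov-Smirnov test. This minimal sketch uses SciPy’s `ks_2samp`. Both samples are synthetic, and the 0.01 p-value cutoff is an arbitrary assumption; real monitoring would track many features over time.

```python
import numpy as np
from scipy.stats import ks_2samp

rng = np.random.default_rng(0)
train_feature = rng.normal(loc=0.0, scale=1.0, size=5000)  # distribution at training time
live_feature = rng.normal(loc=0.5, scale=1.0, size=5000)   # simulated shifted production data

result = ks_2samp(train_feature, live_feature)
drift_detected = result.pvalue < 0.01  # the 0.01 cutoff is an illustrative choice
print(f"KS statistic={result.statistic:.3f}, p-value={result.pvalue:.2e}, drift={drift_detected}")
```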

Here’s a simple Flask example for deploying a model:

```python
import pickle

from flask import Flask, request, jsonify

# Load the trained model once at startup (only unpickle files you trust)
with open('model.pkl', 'rb') as f:
    model = pickle.load(f)

app = Flask(__name__)

@app.route('/predict', methods=['POST'])
def predict():
    # Expects a JSON body like {"features": [...]} with one value per model feature
    data = request.get_json()
    prediction = model.predict([data['features']])
    return jsonify({'prediction': prediction.tolist()})

if __name__ == '__main__':
    # debug=True is for local development only; use a production WSGI server when deploying
    app.run(debug=True)
```

This code provides a straightforward way to deploy a machine learning model as a REST API, allowing for easy integration with front-end applications.
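The Flask service above loads a file named `model.pkl`. Here is a minimal sketch of producing that file. It assumes a scikit-learn logistic regression trained on the iris data, which has 4 features, so a request body would look like `{"features": [5.1, 3.5, 1.4, 0.2]}`. Any model with a `predict` method would work the same way.

```python
import pickle

from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000).fit(X, y)

# Serialize the trained model to the file the Flask app loads
with open("model.pkl", "wb") as f:
    pickle.dump(model, f)

# Sanity check: reload the model and predict on one sample with 4 features
with open("model.pkl", "rb") as f:
    restored = pickle.load(f)
print(restored.predict([[5.1, 3.5, 1.4, 0.2]]))
```

Pickle files are tied to the library versions that wrote them, so I would record those versions alongside the model. This is one practical side of the versioning challenge listed above.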

Conclusion

In navigating the landscape of Machine Learning in AI Interview Questions, I recognize the pivotal role that this knowledge plays in shaping my career in artificial intelligence. The questions not only encompass the foundational aspects of machine learning, such as the distinctions between supervised and unsupervised learning, but also dive deep into advanced methodologies like ensemble techniques and neural networks. This breadth of understanding is essential for standing out in interviews and demonstrating my capability to tackle real-world challenges, a skill highly sought after by employers in this rapidly evolving field.

Moreover, the integration of practical coding examples alongside theoretical concepts empowers me to articulate my knowledge confidently and effectively. This hands-on experience bridges the gap between theory and application, ensuring that I can translate complex algorithms into actionable solutions. As I prepare for my upcoming interviews, I feel a renewed sense of determination, knowing that my expertise in machine learning not only enhances my employability but also opens doors to innovative opportunities and competitive salaries in the AI industry. With the right preparation and a solid grasp of these topics, I am poised to make a lasting impression on prospective employers and contribute meaningfully to the future of technology.